As open data gains momentum, an increasing number of organizations are thinking about ways to make their data available for others to use. Here are some thoughts on how to approach design issues when making open government data available.

TL;DR: See if you can publish your open data as file dumps instead of building advanced APIs that force entrepreneurs to integrate their apps with your infrastructure.

A fictional background

It was supposed to be a regular day for John at the server facility of the government weather agency. But when he came in to work that morning, his colleague Mike was in a panic. “Look! We are in the middle of a DDoS attack. The API server is flooded and the database server is on its knees. The meteorologists cannot work.” John started looking at the server logs. Between 7 and 8 a.m. there was a sharp increase in traffic. Loads of API calls were made from many different IP addresses. Then, all of a sudden, server load decreased and everything was back to normal.

What happened?

A year ago the weather agency had started to make its data available as part of the agency’s open government initiative. They were in a rush at the time and had decided to create an API for their weather data by setting up an internet-facing API server. The API design tried to take into consideration the use cases that entrepreneurs might have, but it was hard to know what people wanted. They settled on three generic API calls.

Fast forward a year, and they discovered that one entrepreneur had built a very successful mobile app used by several hundred thousand users. Every morning it wakes you up by announcing today’s weather. To get the necessary data, the app had to make two API calls, and every installed copy made them each morning to wake up its owner. That promptly crashed the API server, which wasn’t designed to cope with the load.

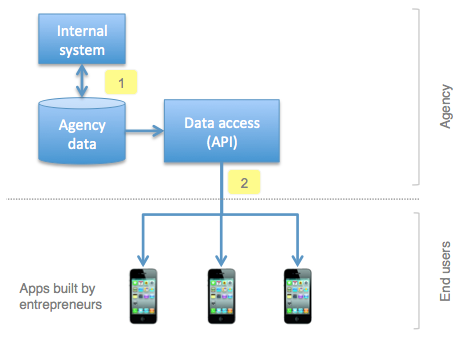

Let’s call this the direct integration API model.

The Direct Integration API Model

The direct integration API model has several drawbacks:

- Since the APIs [2] are designed for direct integration, this is what developers did. The government agency is now (unknowingly) a critical component in keeping the apps working.

- Because API calls are forwarded to the one and only database server, internal applications are affected when API load is high [1].

- High load from one application will impact all other applications using the same API infrastructure.

- The APIs were designed from hypothetical use cases, forcing applications to make multiple API calls to get the data they require.

Even if the data were offloaded to a separate database server to isolate internal systems from external load, developers would still have to rely on the capacity of the API.

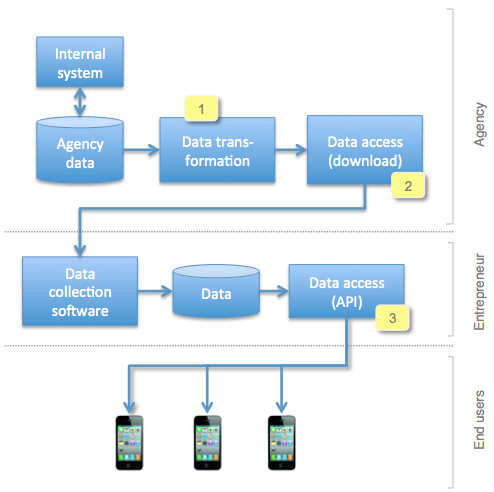

A better way to publish open government data

An alternative to the direct integration API model is to publish data dumps as files. “Boring!” may be the initial reaction from developers, but they will thank you later. In this model, data from the database is exported, transformed to an open, readable format [1] (e.g. CSV), properly named and stored on the web server [2]. This means entrepreneurs can get all your data, load it into their own systems and design their own API according to their use case. Also, high loads will hit their own infrastructure without affecting other apps.

As an added bonus it is very simple to publish data dumps on a web server.
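To make the export step concrete, here is a minimal sketch in Python. It assumes one dump per day and uses made-up field names (`station`, `temp_c`); the function names are hypothetical as well, and a real export would read its rows from the agency's database rather than from a literal list.

```python
import csv
import io
from datetime import date


def dump_filename(dataset: str, region: str, day: date) -> str:
    """Build a predictable relative path such as weather/country/2012-03-01.csv."""
    return f"{dataset}/{region}/{day.isoformat()}.csv"


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


# Hypothetical observations standing in for a database query result.
observations = [
    {"station": "A", "temp_c": 1.5},
    {"station": "B", "temp_c": -3.0},
]
csv_text = rows_to_csv(observations, ["station", "temp_c"])
target_path = dump_filename("weather", "country", date(2012, 3, 1))
```

Writing `csv_text` to `target_path` under the web server's document root is all the publishing that is needed; no request ever reaches the internal database.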

If files and URLs are named consistently, it is easy for entrepreneurs to pick up data over time (e.g. http://data.example.com/weather/country/2012-03-01.csv). An alternative is to create an API that is designed for mirroring data and its changes (e.g. event sourcing over Atom).
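From the consumer's side, a consistent naming scheme means the list of files still to be fetched can be computed rather than discovered. The sketch below assumes one dump per day following the example URL pattern above, and that today's dump has already been published; the function names are hypothetical.

```python
from datetime import date, timedelta

# Assumption: dumps follow the example pattern, one file per calendar day.
BASE_URL = "http://data.example.com/weather/country"


def dump_url(day: date) -> str:
    """URL of the dump for a given day."""
    return f"{BASE_URL}/{day.isoformat()}.csv"


def missing_dump_urls(last_synced: date, today: date) -> list:
    """URLs of every dump published after last_synced, up to and including today."""
    days_behind = (today - last_synced).days
    return [
        dump_url(last_synced + timedelta(days=offset))
        for offset in range(1, days_behind + 1)
    ]


# A mirror that last synced on 28 February 2012 and runs again on 1 March.
to_fetch = missing_dump_urls(date(2012, 2, 28), date(2012, 3, 1))
```

An app developer could download each URL in `to_fetch` and load it into their own database, so that end-user traffic only ever hits the developer's infrastructure.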

These two models give us some background for the design considerations involved in publishing open data.

Design considerations

1. Do you really need an API? API projects can become expensive and typically compete with other IT projects that may have a higher priority. Also, designing an API involves making decisions on API use cases. Do you know how users will use your data? Will your API design prevent users from making efficient use of your data in their applications? What is your plan to cope with load?

2. Make it easy for entrepreneurs to keep a local copy of your data up to date. With consistently named data dumps, keeping a local database up to date is simple. More advanced scenarios include using existing protocols and technology for low-latency distribution (e.g. PubSubHubbub).

3. Isolate internal systems from the effects of external data publishing.

4. Make sure you can change your technology without breaking URLs. People are building software that depends on your URLs. Don’t force them to rewrite their software just because you are switching to a new platform. An early warning sign is the presence of platform-specific fragments like “aspx” or “jsp” in your URLs. Get rid of those.
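The warning sign in point 4 is easy to check for automatically. The following is a rough sketch, not a complete audit: the suffix set contains only the two examples named above and would need extending for other platforms, and the function name is hypothetical.

```python
from pathlib import PurePosixPath
from urllib.parse import urlsplit

# Only the two fragments mentioned in the text; extend as needed (assumption).
PLATFORM_SUFFIXES = {".aspx", ".jsp"}


def platform_fragments(url: str) -> list:
    """Return the path segments of a URL that end in a platform-specific suffix."""
    path = urlsplit(url).path
    return [
        segment
        for segment in path.split("/")
        if PurePosixPath(segment).suffix.lower() in PLATFORM_SUFFIXES
    ]


leaky = platform_fragments("http://data.example.com/weather/forecast.aspx?id=1")
clean = platform_fragments("http://data.example.com/weather/country/2012-03-01.csv")
```

Here `leaky` contains `forecast.aspx` while `clean` is empty. Running a check like this over a list of published URLs before launch is cheaper than maintaining redirects after a platform switch.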

There are of course other things to take into consideration, such as semantic descriptions, but that is a matter for a later post.

Hopefully this will save you both money and time, as file exports may be a lot cheaper than creating APIs. What do you think?

Also see: Publishing Open Government Data by W3C

In the next blog post I will look at cases where an API makes a lot of sense (real-time data, collaborative processes).