For a long time I have been interested in what would happen if cameras could do more than capture a somewhat realistic representation of the light coming in through a lens. I have experimented with long exposures, slit-scans and other ways of interpreting the world. But what if we could express a photographic vision in an algorithm and use it when capturing images?

We already have the tools to retouch images in post production, but I am interested in finding out what happens when I implement an idea in an algorithm before any light is captured and then apply it in an environment. I think this could be called algorithmic photography, and below I try to define it and show some examples.

Defining Algorithmic Photography

Algorithmic Photography is a photographic technique involving the design of an algorithm through which information is processed to create a photo. The design of the algorithm takes place before capturing information (e.g. taking a picture) and may involve simple or complex software to achieve the photographic purpose. Thus, algorithmic photography differs from manual retouching of photographs or the manual addition of digital effects in post processing.

An algorithmic camera is a camera system implementing a photographic idea in an algorithm, containing a capture device and an output device displaying a visual representation.

Examples of algorithmic photography include:

- Processing of an image through a black and white filter.

- Using an algorithm to detect faces and blur backgrounds to emulate a short depth of field.

- Processing of video to create a still image from a predefined algorithm.

- Use of artificial intelligence to generate new images from text augmented by photographic input.

It can be argued that almost all digital cameras, and smartphone cameras in particular, are algorithmic cameras, as they in some way process photographic information through a predefined algorithm (e.g. blurring a background to create a better looking portrait or adjusting the moon in night time photos). However, with the increasing availability of advanced AI models for visual perception, photographers have a much wider range of algorithms to choose from.

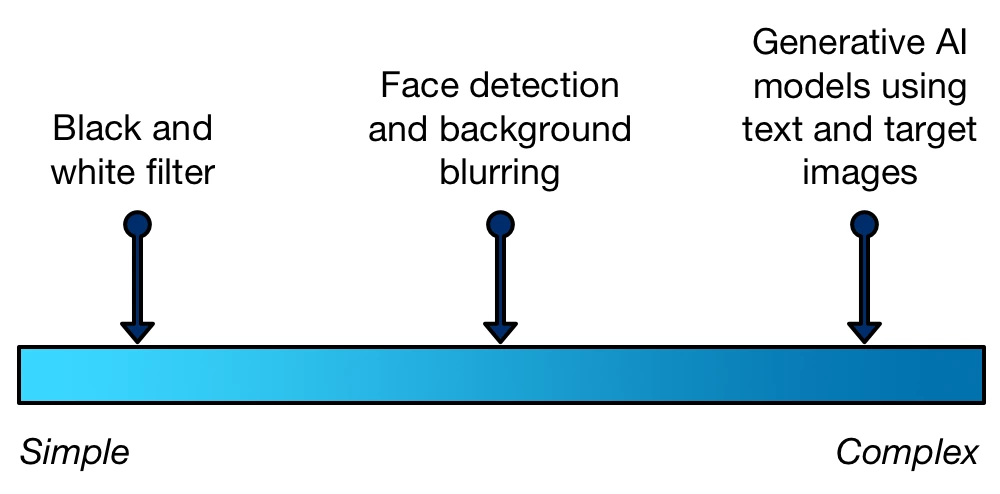

Fig 1. Algorithmic Photography algorithm complexity

Algorithmic photography could be considered related to process art (“where the process of its making is not hidden but remains a prominent aspect of the completed work”).

Creating Algorithmic Photography

Fig 2. Algorithmic Photography process

An idea for an algorithmic camera is sketched out in plain language and implemented in code. The result is an algorithmic camera that is used to capture images. The capture device does not have to be a regular camera; it could be some other device capturing data about the world. However, no advanced post processing by a human takes place.
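One way to picture this process in code is as three parts wired together: a capture device, the algorithm and an output device. The sketch below is only an illustration under my own assumptions. The names `AlgorithmicCamera`, `capture`, `algorithm`, `output` and `shoot` are hypothetical and do not come from any library.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class AlgorithmicCamera:
    """Hypothetical sketch: capture device + algorithm + output device."""

    capture: Callable[[], Any]       # returns raw data about the world
    algorithm: Callable[[Any], Any]  # the photographic idea, fixed before capture
    output: Callable[[Any], None]    # displays or stores the result

    def shoot(self) -> Any:
        raw = self.capture()         # 1. capture information
        photo = self.algorithm(raw)  # 2. apply the predefined algorithm
        self.output(photo)           # 3. hand the result to the output device
        return photo


# Toy usage: the "capture device" returns three brightness values
# and the algorithm inverts them.
shown = []
camera = AlgorithmicCamera(
    capture=lambda: [0, 128, 255],
    algorithm=lambda values: [255 - v for v in values],
    output=shown.append,
)
photo = camera.shoot()
```

Because the algorithm is passed in before `shoot` is ever called, the idea is fixed before any data is captured, which is the point of the definition above.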

In its simplest form, algorithmic photography can start from an algorithm described in plain language, like this:

Step 1: Convert the image to black and white.
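As a minimal sketch, this one-step algorithm could look like the Python below. It works on plain lists of RGB tuples so that it runs without any imaging library. The function name `to_black_and_white` is hypothetical, and the choice of the ITU-R 601 luma weights is an assumption, since "black and white" could be implemented in several ways.

```python
def to_black_and_white(pixels):
    """Convert rows of (r, g, b) tuples to rows of 0-255 grey levels.

    Uses the ITU-R 601 luma weights (an assumption for this sketch).
    """
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in pixels
    ]


# A tiny 2x2 "image": white, black, pure red, pure blue.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]
grey = to_black_and_white(image)
```

In practice an imaging library would do this for you. Pillow's `Image.convert("L")`, for example, applies the same weighting to a real image.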

However, with democratized access to machine learning models and the processing power they need, almost anyone can now design an algorithm that supports much more complex ideas.

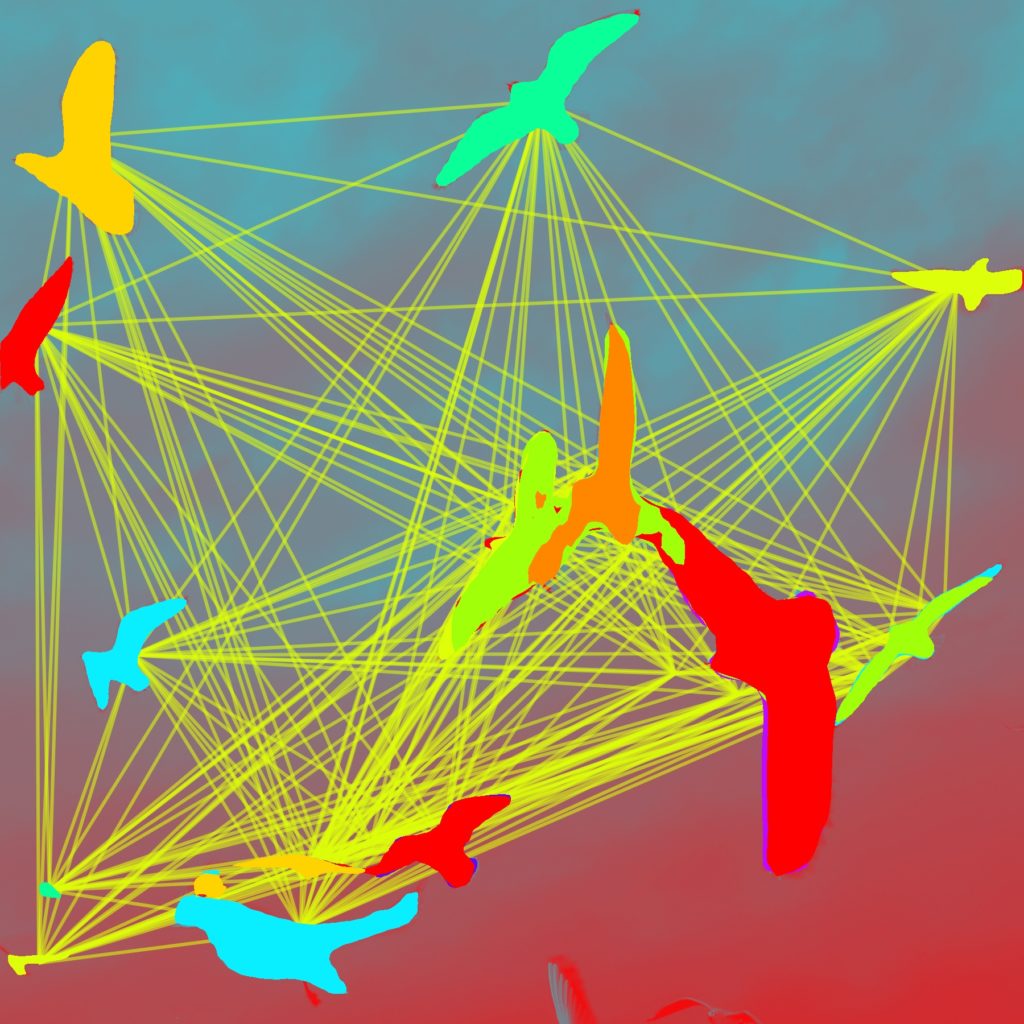

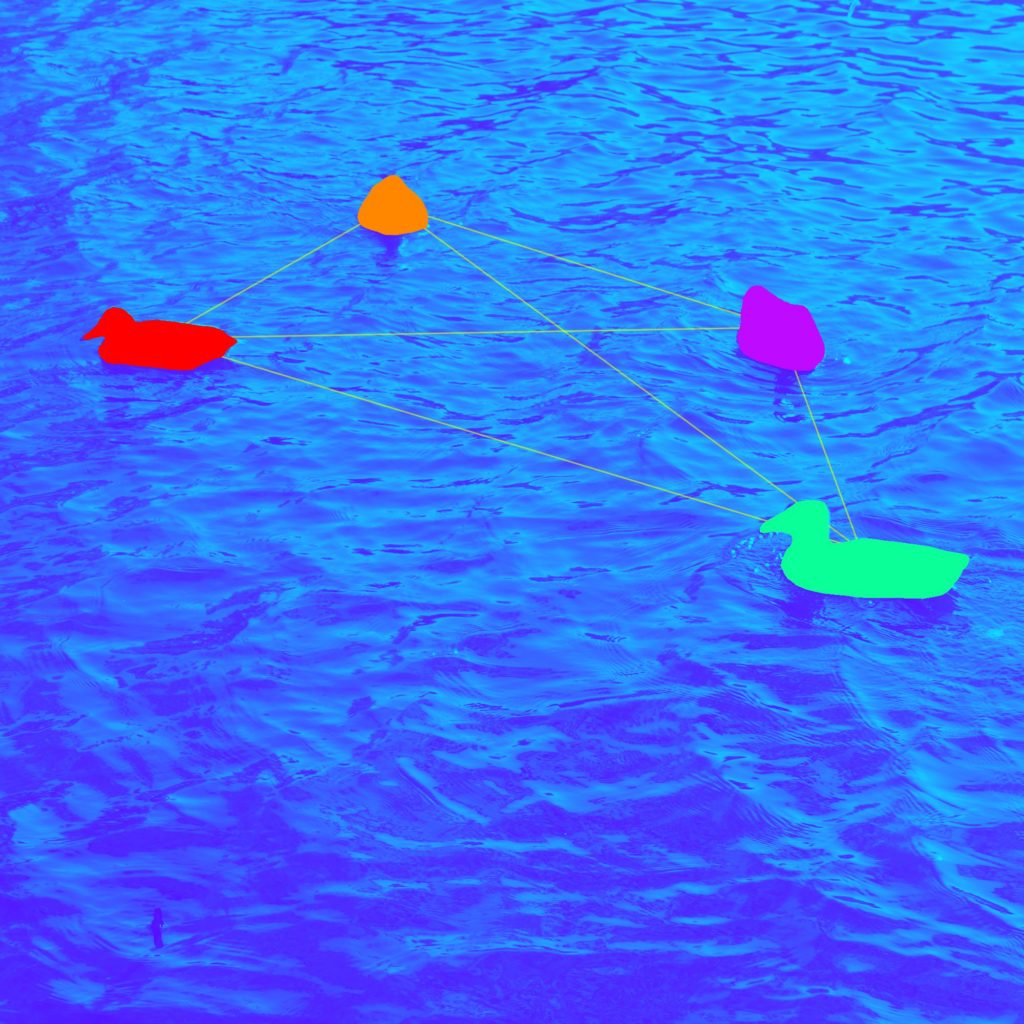

An example: The colorized connected bird algorithmic camera

This is an example of an algorithmic camera I made.

- Set up a 10 color palette with the following colors:

- Pick two random colors from the palette and colorize the image.

- For all birds in the image:

  - Draw a yellow line from the center of the bird to all other birds.

  - Cover the bird in a random color from the palette.

Implementing this algorithm in software requires multiple tools. For the example above, I implemented the first two steps of the algorithm using PIL, the Python Imaging Library. I set my camera to a square capture format and went out and took a picture:

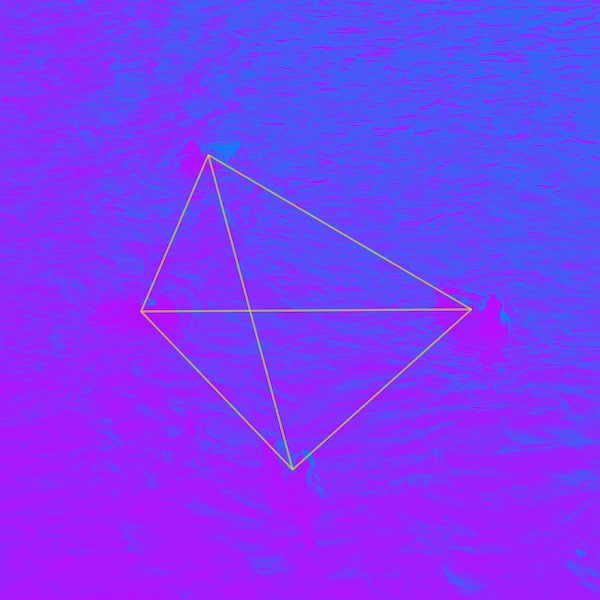

Fig 3. Square input image colorized using two random colors from the palette
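The first two steps can be sketched roughly as follows. This is a dependency-free illustration and not the code behind the figure. The palette values are placeholders (the actual ten colors are not listed here), the helper names `luminance` and `colorize` are hypothetical, and mapping dark pixels to the first color and bright pixels to the second is my assumption about what "colorize" means.

```python
import random

# Placeholder palette: these ten RGB values are assumptions for illustration.
PALETTE = [
    (230, 57, 70), (241, 250, 238), (168, 218, 220), (69, 123, 157),
    (29, 53, 87), (255, 183, 3), (251, 133, 0), (42, 157, 143),
    (38, 70, 83), (233, 196, 106),
]


def luminance(rgb):
    """Perceived brightness of an (r, g, b) pixel, from 0 to 255."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def colorize(pixels, dark, light):
    """Map each pixel's brightness onto a gradient from `dark` to `light`."""
    out = []
    for row in pixels:
        new_row = []
        for rgb in row:
            t = luminance(rgb) / 255.0  # 0.0 = black, 1.0 = white
            new_row.append(
                tuple(round(d + (l - d) * t) for d, l in zip(dark, light))
            )
        out.append(new_row)
    return out


rng = random.Random(7)  # seeded only so the sketch is repeatable
first, second = rng.sample(PALETTE, 2)  # two distinct random colors
remaining = [c for c in PALETTE if c not in (first, second)]

image = [[(0, 0, 0), (255, 255, 255)]]  # one black and one white pixel
colorized = colorize(image, first, second)
```

With Pillow, `ImageOps.colorize` offers a comparable two-color mapping for greyscale images. The `remaining` list keeps the eight unused colors available for later steps.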

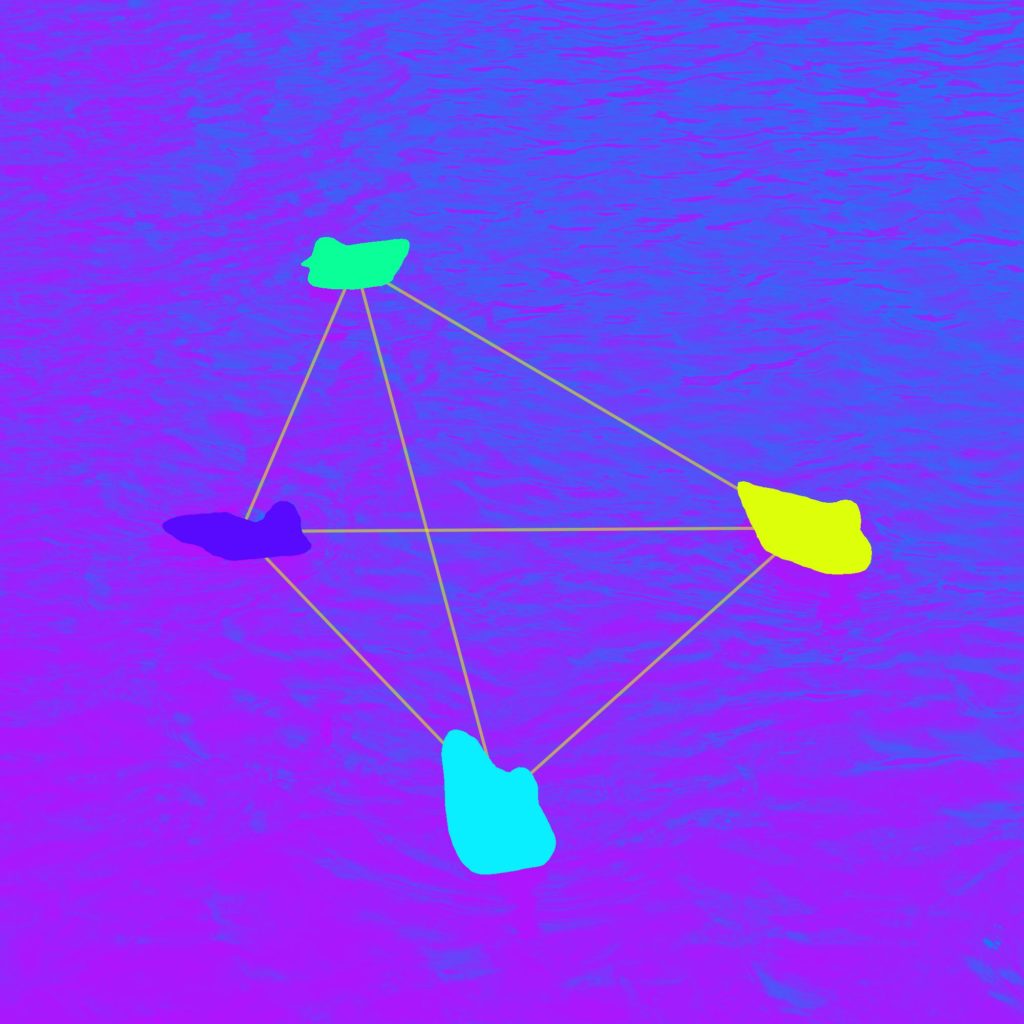

Implementing the following steps involves detecting birds and their outlines. Facebook AI Research provides a great library called Detectron2, which comes with a multitude of pre-trained models for detection and segmentation. I used the panoptic segmentation model on the input image and got box coordinates for each bird. Using these coordinates, it was possible to connect all birds with yellow lines on the colorized image using the aggdraw library.
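The geometry of the connecting step can be sketched without any detection or drawing library. The example below assumes each bird box is given as an `(x0, y0, x1, y1)` tuple in pixel coordinates. The function names and the sample boxes are made up for illustration. Connecting every pair of birds once gives the same picture as drawing a line from each bird to all the others.

```python
from itertools import combinations


def box_center(box):
    """Center point of an (x0, y0, x1, y1) box."""
    x0, y0, x1, y1 = box
    return ((x0 + x1) / 2, (y0 + y1) / 2)


def connecting_lines(boxes):
    """Return one (start, end) segment for every pair of boxes."""
    centers = [box_center(b) for b in boxes]
    return list(combinations(centers, 2))


# Three made-up bird boxes.
bird_boxes = [(0, 0, 10, 10), (20, 0, 40, 20), (0, 30, 20, 50)]
lines = connecting_lines(bird_boxes)
```

Each segment in `lines` can then be handed to a drawing library and stroked in yellow. For n birds this yields n(n-1)/2 lines, so a large flock quickly turns into a dense web.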

Fig 4. Birds detected and connected

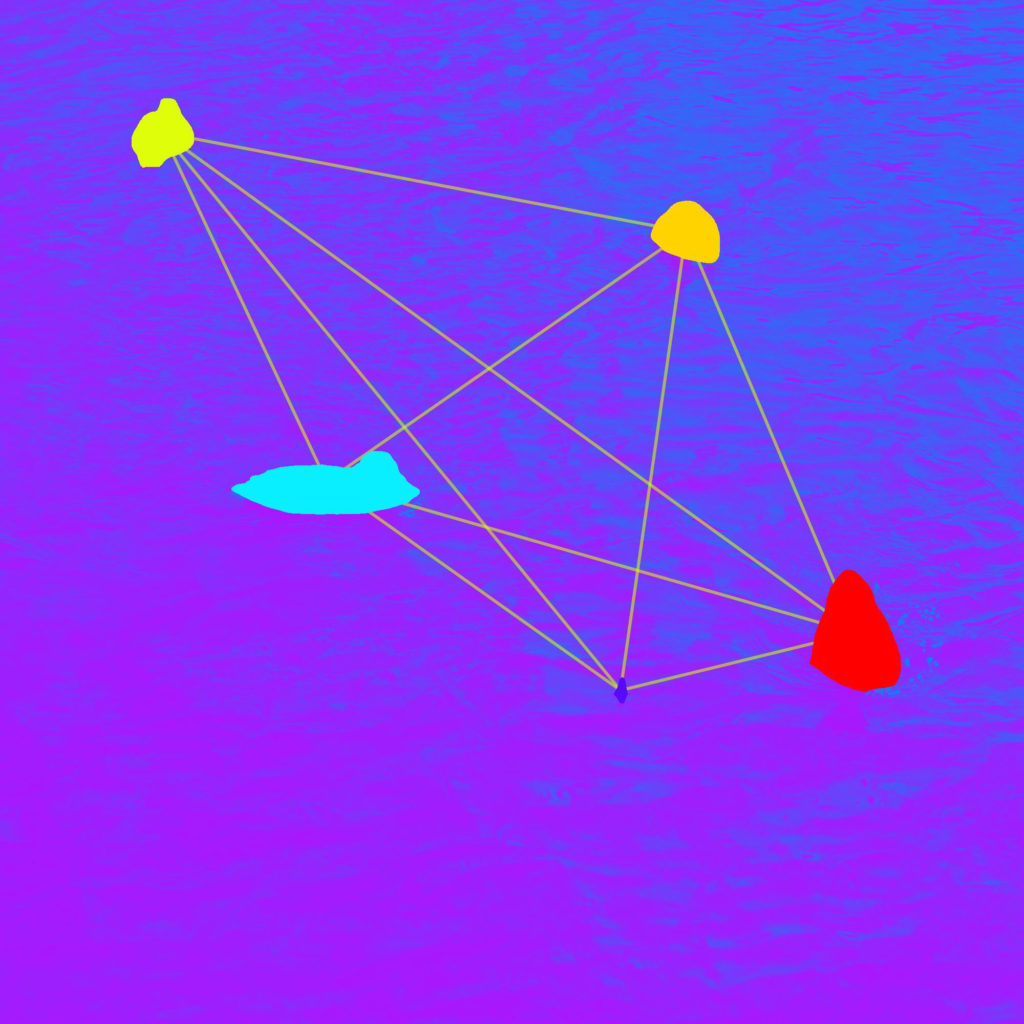

After connecting the birds with a yellow line it was possible to use the Detectron2 masks to cover each bird using the remaining colors in the palette.
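The covering step can be sketched in the same dependency-free style. The example assumes each bird's mask is available as a grid of booleans with the same size as the image, which is how instance masks are commonly represented. The function name `cover_with_masks`, the tiny image and the two colors are placeholders for illustration.

```python
import random


def cover_with_masks(pixels, masks, colors, rng=random):
    """Paint every masked region in one randomly chosen color."""
    out = [list(row) for row in pixels]  # copy so the input stays untouched
    for mask in masks:
        color = rng.choice(colors)       # one color per bird
        for y, mask_row in enumerate(mask):
            for x, inside in enumerate(mask_row):
                if inside:
                    out[y][x] = color
    return out


background = (200, 200, 200)
image = [[background] * 3 for _ in range(2)]  # 3x2 image, all grey
bird_mask = [
    [False, True, True],
    [False, False, True],
]
remaining_colors = [(255, 183, 3), (42, 157, 143)]  # placeholder values
result = cover_with_masks(
    image, [bird_mask], remaining_colors, random.Random(1)
)
```

Pixels outside every mask keep their colorized value, so the connecting lines stay visible everywhere except where a bird covers them.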

Fig 5. The colorized connected bird algorithmic camera

Every time a picture is taken with the “colorized connected bird” camera the same algorithm is applied and similar output is produced.

Fig 6. More photographs from “the colorized connected bird algorithmic camera”.

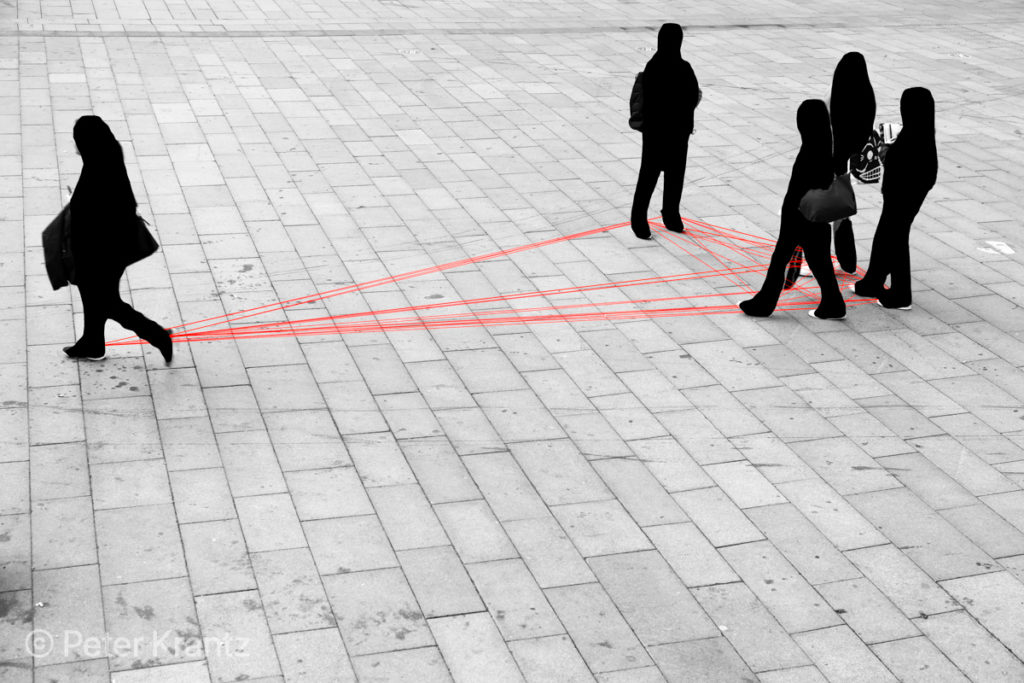

A different example: The feet connector algorithmic camera

Fig 7. Feet people - surveillance inversion

This algorithm involves detecting the feet of people using the COCO keypoints model for Detectron2. Given the availability of advanced models for object detection, the barrier to creating more advanced algorithmic photography has been lowered. I hope this article has inspired you to think about your own ideas for algorithmic photography.

If you want to see more of my photographic experiments, follow me on Instagram here. I would love to know more about what your ideas are.